The alarm bells of an impending AI bubble rang loudly at the recent Cerebral Valley conference, unsettling tech enthusiasts, investors, and even founders. Yet miles away at United Nations Headquarters, a different urgency filled the corridors. Officials were reviewing applications for the newly established Independent International Scientific Panel on Artificial Intelligence, a body of 40 experts tasked not with shaping global AI rules, but with assessing risks, opportunities, societal impacts, and emerging trends to inform governments through evidence-based, non-prescriptive analysis. Their mandate, set by a General Assembly resolution, is to synthesize research, identify concerns, and produce annual policy-relevant summaries that guide dialogue without negotiating norms.

While Silicon Valley debates valuations and speculative excess, the UN faces a different dilemma: how to uphold multilateralism in a technological era defined by speed, opacity, and asymmetry. The AI industry moves in quarterly cycles driven by capital and competition; the UN operates through consensus, geographic balance, and deliberation. This mismatch has created tension between a rapidly evolving technological frontier and an international system built for gradual negotiation. Yet many Member States, particularly from the Global South, view this slower, inclusive process as essential to preventing AI from becoming another domain where power consolidates among a few actors while others bear the risks.

The emergence of the AI bubble has only heightened these concerns. Investors are pouring billions into models with uncertain use cases, inflating valuations faster than the technology’s real-world maturity can justify. This speculative momentum fuels pressure to deploy systems quickly, often without sufficient testing, transparency, or alignment with public interests. In such an environment, the UN’s scientific panel becomes critically important. Its role is to cut through hype, corporate claims, and fragmented research to distinguish what is genuinely transformative from what is merely inflated expectation. Evidence-based assessments can help Member States identify real risks, tangible opportunities, and areas where caution is essential.

The AI industry is inflating a speculative bubble driven by massive investment, weak evidence, and pressure for rapid deployment. The UN’s newly established Independent International Scientific Panel aims to ground global discussions in research, inclusivity, and public-interest priorities—especially for communities often excluded from technological decision-making. As markets chase quick returns, the UN offers the long-term coordination needed to ensure AI advances equitably and responsibly.

Inside the UN’s push to bring evidence, equity, and global perspective to a tech industry spiraling into speculation.

Meanwhile, those most affected by AI disruptions are often the least represented in global discussions. Farmers dependent on climate patterns, Indigenous communities whose cultural identities are tied to land and language, and coastal populations confronting environmental stress all stand at the front line of AI’s societal impact. Yet their knowledge systems and experiences are rarely reflected in international debates. Ensuring the panel’s work remains accessible, inclusive, and grounded in diverse social realities is essential if AI is to serve humanity as a whole rather than a privileged minority.

Compounding this challenge, speculative investment trends have diverted funding away from foundational AI research. Venture capital overwhelmingly favors applications with fast returns over work on algorithms, safety science, or interoperable infrastructure. This imbalance risks creating an ecosystem where flashy products flourish while core reliability, fairness, and security research remains underfunded. It also consolidates influence among a narrow set of actors who can dictate not just market direction, but the trajectory of AI itself.

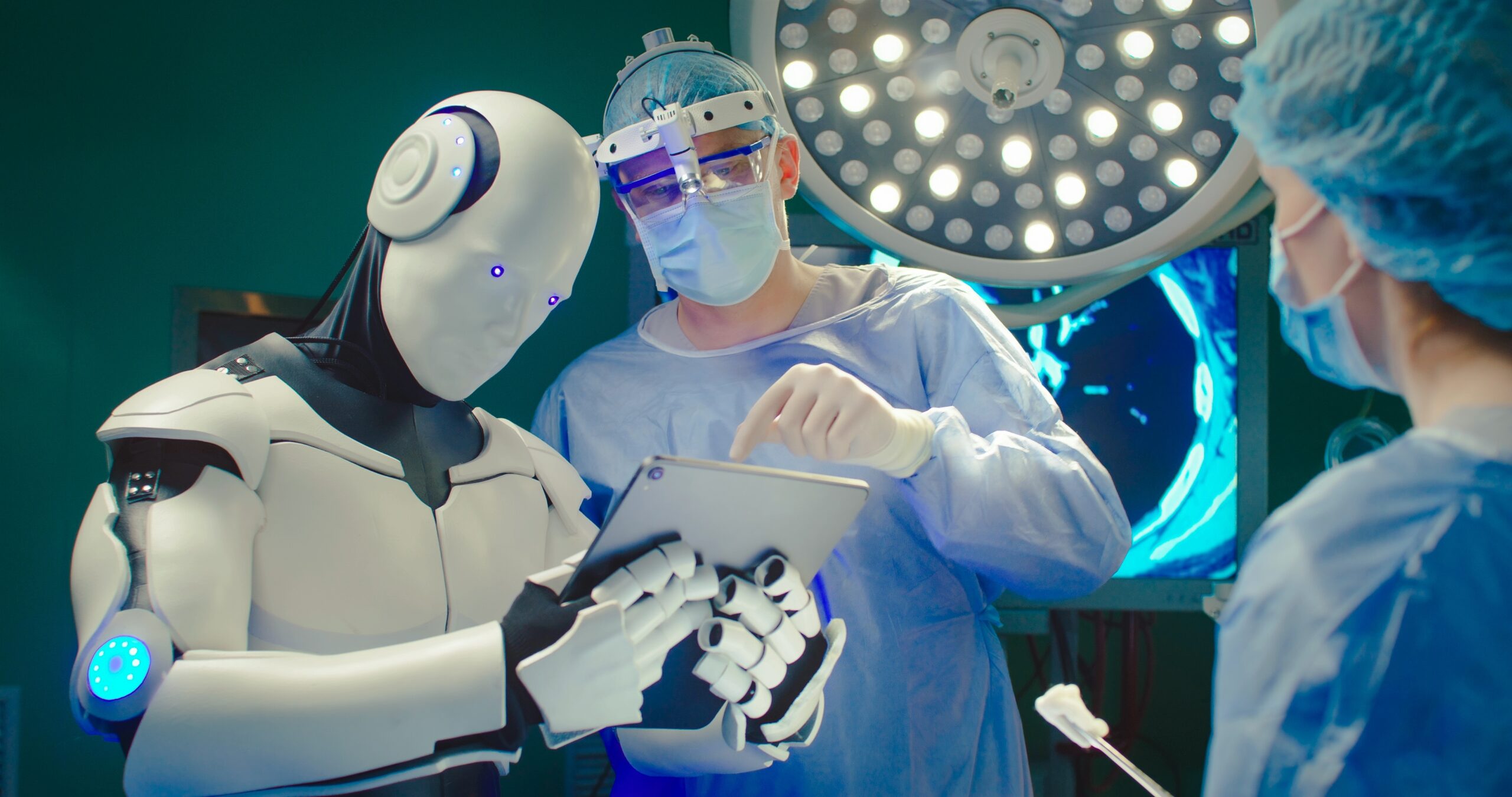

Here, the UN has a rare opportunity. As a coordinating body, it can channel expertise, attention, and potentially funding toward long-term priorities that markets underserve—foundational research, global safety standards, and capacity-building initiatives. Such efforts can empower Member States, especially in the Global South, to participate in and benefit from AI development rather than remain dependent on dominant actors. Aligning AI progress with public interest, equity, and global stability requires the kind of multilateral leadership the UN is uniquely positioned to provide.

The unfolding AI bubble is more than a market phenomenon. It is a test of global governance, international coordination, and collective foresight. Through its scientific panel and convening power, the UN can bridge the divide between rapid technological ambition and inclusive, evidence-based oversight. If the international community prioritizes collaboration, diverse voices, and rigorous analysis, AI’s speculative momentum can be transformed into responsible innovation. The choices made now will determine whether AI becomes a tool for equitable progress or another arena where wealth and influence concentrate at the expense of global well-being.